It turns out that robots aren't very good at identifying pirated porn, TV shows, music or anything else, and that could be a serious issue for Internet companies.

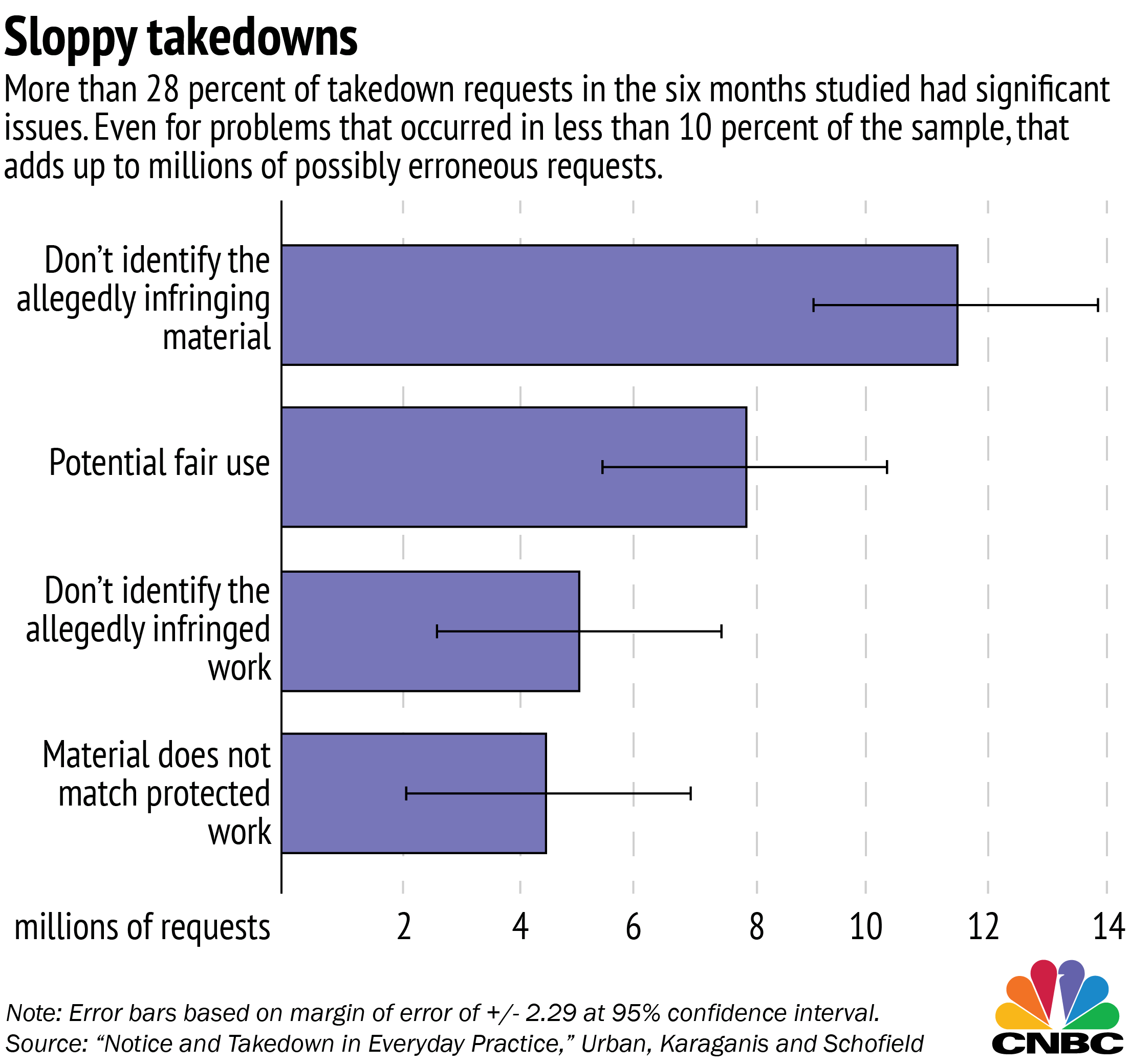

Nearly 30 percent of requests to remove copyrighted material are of questionable validity, according to a new set of studies by researchers at Berkeley Law and Columbia University. In millions of cases, the targeted content didn't even match the copyrighted work, which in most cases was music or adult entertainment.

Part of the problem is the automated systems that some of the biggest players use to catch misused material. Some of those "bots" aren't very discerning.

There's a big difference between holding the rights to a song by Usher and the movie "The House of Usher," or the HBO series "Girls" and Fox's "New Girl," or a "Lost" episode and "Extreme Makeover: Home Edition."

Yet, according to the researchers' detailed look at a database of more than 100 million requests, incorrect takedown requests like those are frequently made and sometimes enforced. That's a big problem for the Digital Millennium Copyright Act procedures that are often considered the legal backbone of the Internet.